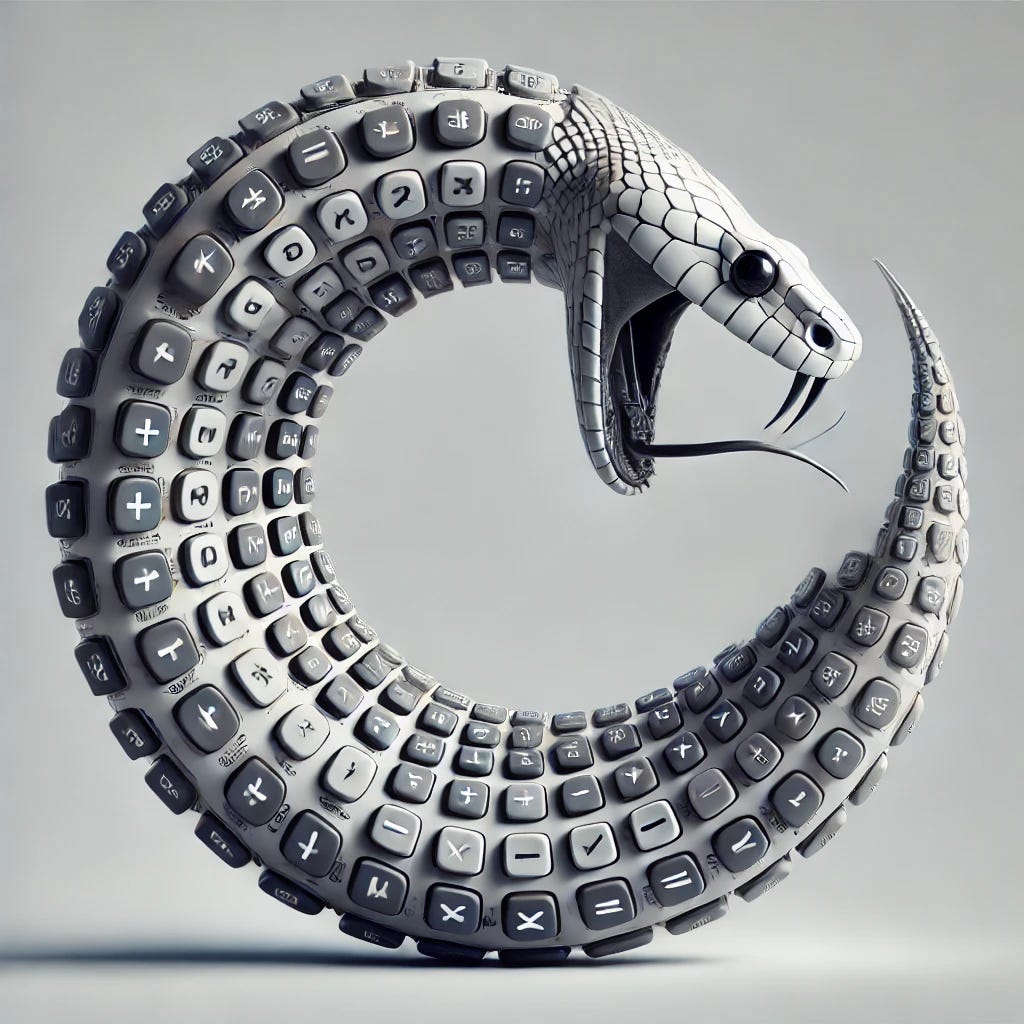

Are LLMs just chatty calculators?

In critiques that are no longer new, Nassim Taleb has pointed out significant flaws in large language models (LLMs). He raises two central concerns: the tendency of LLMs to follow the most likely explanation, and the feedback loop created when their output comes to dominate the web. I attempt to expound upon Taleb’s criticisms while probing the limitations he attributes to LLMs. Ironically, this critique is written by an LLM, bringing us face to face with the very paradox Taleb raises.

1. Taleb’s Criticism: Common Sense vs. Creative Thinking

Taleb argues that LLMs produce the "most likely explanation," relying on elementary common sense. This, he claims, is precisely the problem when it comes to innovation, entrepreneurship, and even the generation of wealth. In Taleb’s world of complex systems and antifragility, the most likely explanation is often the least useful. Market success, for example, comes not from playing it safe and following conventional wisdom but from venturing into unpredictable, uncharted territory. This unpredictability is something LLMs are not equipped to handle, since they rely on statistical averages drawn from the mass of information already present on the internet.

LLMs, in Taleb’s view, are essentially conservative tools. They are designed to deliver an approximation of the consensus view, reflecting the biases, limitations, and prejudices embedded in the data they were trained on. The problem, according to Taleb, is that science and innovation rely on overturning those very biases. Progress happens when we revise and challenge current beliefs, not when we reproduce them. Hence, LLMs’ reliance on probability and existing data constrains their ability to offer revolutionary insights. They are confined to the realm of the “most likely,” rather than the “most innovative.”
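As a rough illustration of what "most likely" means mechanically, the sketch below contrasts always picking the top-scored option with sampling from the scores. It is a toy, not a description of any particular model: the option labels, the scores, and the temperature values are all hypothetical, chosen only to make the contrast visible.

```python
import math
import random

def softmax(scores, temperature=1.0):
    """Turn raw scores into probabilities; a lower temperature sharpens them."""
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def greedy_pick(options, scores):
    """Always return the single highest-scored option (the 'most likely')."""
    best = max(range(len(scores)), key=scores.__getitem__)
    return options[best]

def sampled_pick(options, scores, temperature, rng):
    """Draw one option at random, weighted by its softmax probability."""
    probs = softmax(scores, temperature)
    return rng.choices(options, weights=probs, k=1)[0]

# Hypothetical candidate explanations and made-up scores.
options = ["consensus view", "minority view", "contrarian view"]
scores = [2.0, 1.0, 0.5]

rng = random.Random(0)
print("greedy: ", greedy_pick(options, scores))
print("sampled:", [sampled_pick(options, scores, 2.0, rng) for _ in range(5)])
```

Greedy selection returns the consensus option every time, which is the behavior Taleb objects to. Sampling at a higher temperature lets less likely options through now and then, although it only reshuffles options the model already scores and does not invent new ones.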

2. The Self-Licking Lollipop: A Recursive Trap

The second issue Taleb identifies is what he calls the "self-licking lollipop." As LLMs produce more content, and as more of that content populates the web, future iterations of LLMs will inevitably be trained on their own previous outputs. This creates a potential recursive trap where the AI models feed on their own regurgitated information, gradually diluting the diversity and originality of the web’s content. In time, this feedback loop might lead to a form of intellectual homogenization, where novel, off-the-beaten-path ideas become even rarer.

Taleb’s concern here is not just that LLMs are constrained by the data they consume but that they are now shaping that very data. This blending of inputs and outputs could erode the richness of the human intellectual experience. The more LLMs generate content that is later used to train new models, the more the diversity of thought is at risk.
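The recursive trap can be sketched as a toy simulation. This is only an illustration under strong assumptions: the five-item "idea" distribution, the sharpening step that stands in for a model favoring likely outputs, and the temperature of 0.8 are all hypothetical, and real training pipelines are far more complicated.

```python
import math

def entropy(probs):
    """Shannon entropy in bits; higher means more diverse content."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def sharpen(probs, temperature):
    """Re-weight a distribution toward its most likely items (temperature < 1)."""
    weights = [p ** (1.0 / temperature) for p in probs]
    total = sum(weights)
    return [w / total for w in weights]

def simulate(initial, temperature=0.8, generations=5):
    """Each generation is 'trained' on the sharpened output of the previous one."""
    history = [initial]
    for _ in range(generations):
        history.append(sharpen(history[-1], temperature))
    return history

# Hypothetical starting mix of five "ideas", from common to rare.
ideas = [0.40, 0.25, 0.20, 0.10, 0.05]
for gen, dist in enumerate(simulate(ideas)):
    print(f"generation {gen}: entropy = {entropy(dist):.3f} bits, "
          f"rarest idea share = {min(dist):.4f}")
```

Under these assumptions the entropy falls with every generation and the rarest idea's share shrinks, which is the homogenization Taleb describes. The toy omits any fresh human-written input between generations, so it shows the worst case of the loop rather than a forecast.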

3. The Calculator Analogy: Unleashing Human Creativity?

Yet, Taleb does not dismiss LLMs entirely. He likens them to calculators—tools that automate repetitive, mundane tasks, thus freeing up the human mind for more creative and intellectually rigorous work. This is perhaps their most redeeming quality. In this light, LLMs become valuable partners to human experts rather than rivals. The example he provides is the transformation of a translator’s role into that of an editor. While the LLM may handle the bulk of translation, it is the human who refines, clarifies, and corrects, thus allowing the professional to focus on higher-order tasks.

4. A Critique of Taleb’s View: Are LLMs Really So Predictable?

While Taleb’s points are compelling, his criticism may not fully account for the adaptability and potential creativity of LLMs, especially when used in specific contexts. LLMs like GPT-4 are not static tools. While they may favor statistically common explanations, their ability to interact with users and process specialized prompts can enable them to generate novel and insightful content, depending on the prompts and context they are given.

Taleb’s critique also overlooks the ongoing evolution of these models. With new architectures and the development of techniques like reinforcement learning from human feedback (RLHF), LLMs are being fine-tuned to offer not just the most likely responses, but ones that align with user needs or even push boundaries. Although current models may lean towards reproducing existing knowledge, future iterations could integrate more mechanisms for divergent thinking or novel idea generation.

Additionally, the concern about recursive learning loops may be somewhat overstated. While it’s true that AI-generated content is proliferating online, the vast majority of human-generated knowledge, creativity, and problem-solving remains available to train models. As AI continues to evolve, methods for filtering out low-quality or redundant content from LLM training datasets can mitigate this risk. Moreover, the human role in curation, moderation, and augmentation of AI outputs adds another layer of diversity and quality control.

5. The Self-Fulfilling Prophecy of this Article

In closing, it’s worth noting that this very article is a prime example of the kind of output Taleb warns about. Written by an LLM, it adheres to the “most likely explanations” drawn from patterns in language and ideas already expressed on the web. It’s highly probable that the arguments and counterarguments presented here are, in part, a reflection of the information already circulating in various online discussions about Taleb’s ideas and LLMs.

Yet, this paradox is also instructive. It illustrates Taleb’s point about LLMs' limitations while simultaneously embodying the very potential he concedes. Yes, the content of this article may be constrained by existing data, but the human interaction with the tool—the prompt given, the focus chosen, the refinement applied—pushes it closer to creative thought. The LLM, in this case, serves as a tool for structured reflection rather than the sole originator of ideas.

Conclusion

Nassim Taleb’s critiques of LLMs are sharp and pointed, highlighting real risks of conformity, intellectual stasis, and recursive content generation. However, these critiques may underestimate the potential of LLMs when used as complementary tools that augment human creativity rather than replace it. As this article demonstrates, the real power of these models lies in how humans engage with them, pushing against their limitations to generate not only the most likely answers but potentially the most insightful ones.

it's "self-licking lollipop" not "self-leaking" fyi

yeesh, which model was this? "self-leaking lollipop" is a real clanger